Automating Design System Updates

Our design system website was updated manually for years. This made sense early on, when we were just starting to formalize a design system. As we added a significant number of React components (42 separate repos/component packages at its largest), this became untenable, especially once I became the only maintainer.

Developers couldn't trust that the components they saw on the site reflected the latest versions, since it was hard to even track when updates were made. And even if I had wanted to, I couldn't take 20 minutes out of my day to update the website every time someone merged a component change.

This manual process was also a risk for the design system itself. If I got hit by a bus, there was a good chance that no one would take over to keep updating the website. It's an annoying process that distracts from other priorities, and as an organization we didn't have a strong history of prioritizing documentation.

Goals

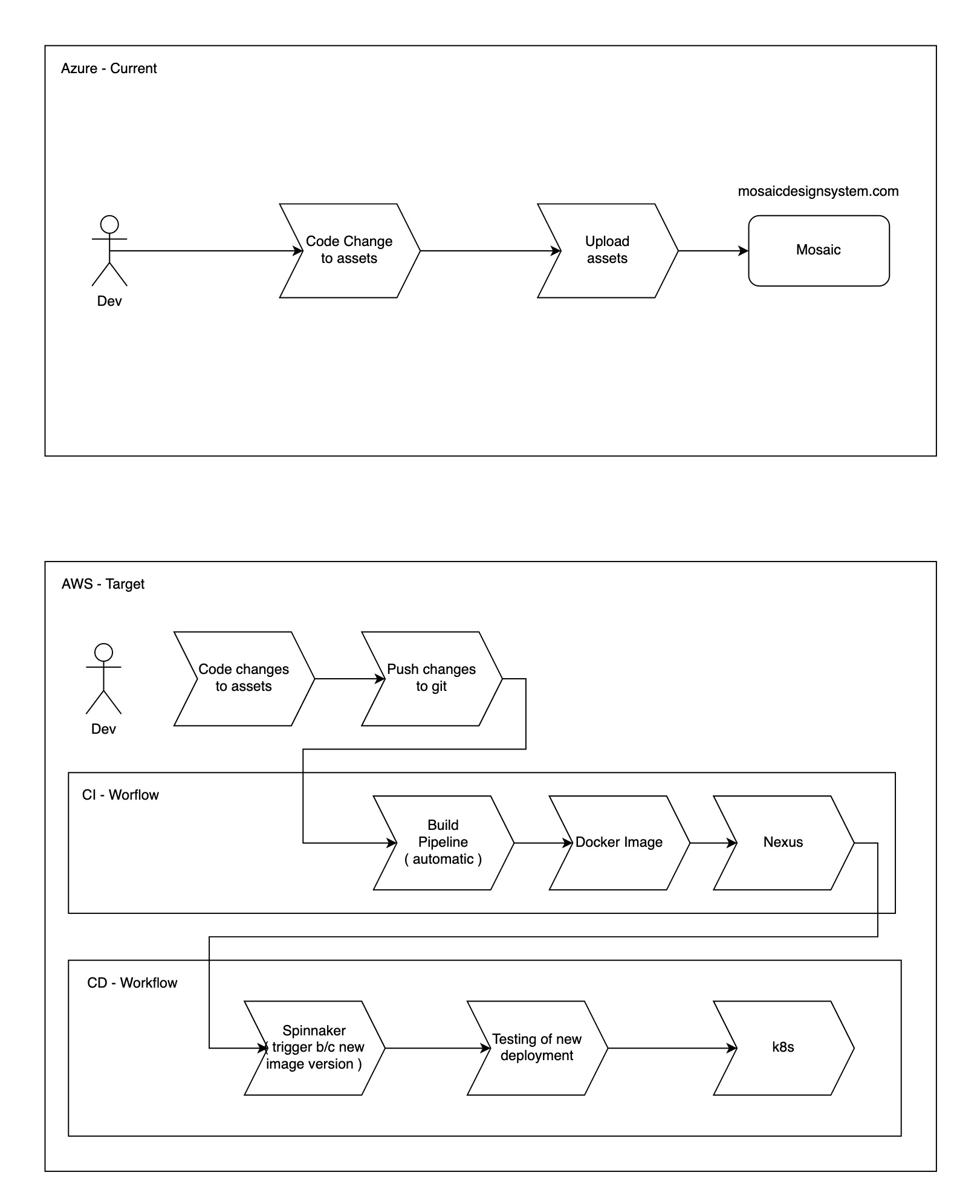

- Implement a CI/CD pipeline for the website to replace manual FTP uploads.

- Automatically update the website every time a new version of our core styles or our design system components was published. This way there would be no question that the website was showing the current state of the system.

Process

I was the only person dedicated to the design system, and the other members of the tools team I was on (who had experience with our CI/CD standards) were swamped with their own work. So the only way this was going to get done was if the bulk of the effort fell to me. I first worked with our architect to figure out a plan, as deployment was not something I had any experience with.

I knew nothing about Docker up front, but I had access to Udemy's online learning content through work, so my first step was to work through a short course on Docker. This took a bit longer than a short course normally would, because the topic was so unfamiliar and I was taking notes to make sure I understood everything. After that I worked through a tutorial on how to Dockerize a basic Create React App project, which gave me a great reference point. With a bit of experimentation and messing around with Nginx, I got the website and component library containerized and pushed up to our artifact repository. That put the continuous integration part of the plan in place without too much trouble.

Next up was deployment. I spent some time learning about Kubernetes and Spinnaker, our technologies of choice for deployment. Unfortunately, they proved too complex for me to make meaningful progress on my own, and documentation about our specific setup and standards was sparse. A teammate with a lot of Spinnaker experience kindly stepped in and helped get automatic deployments working.

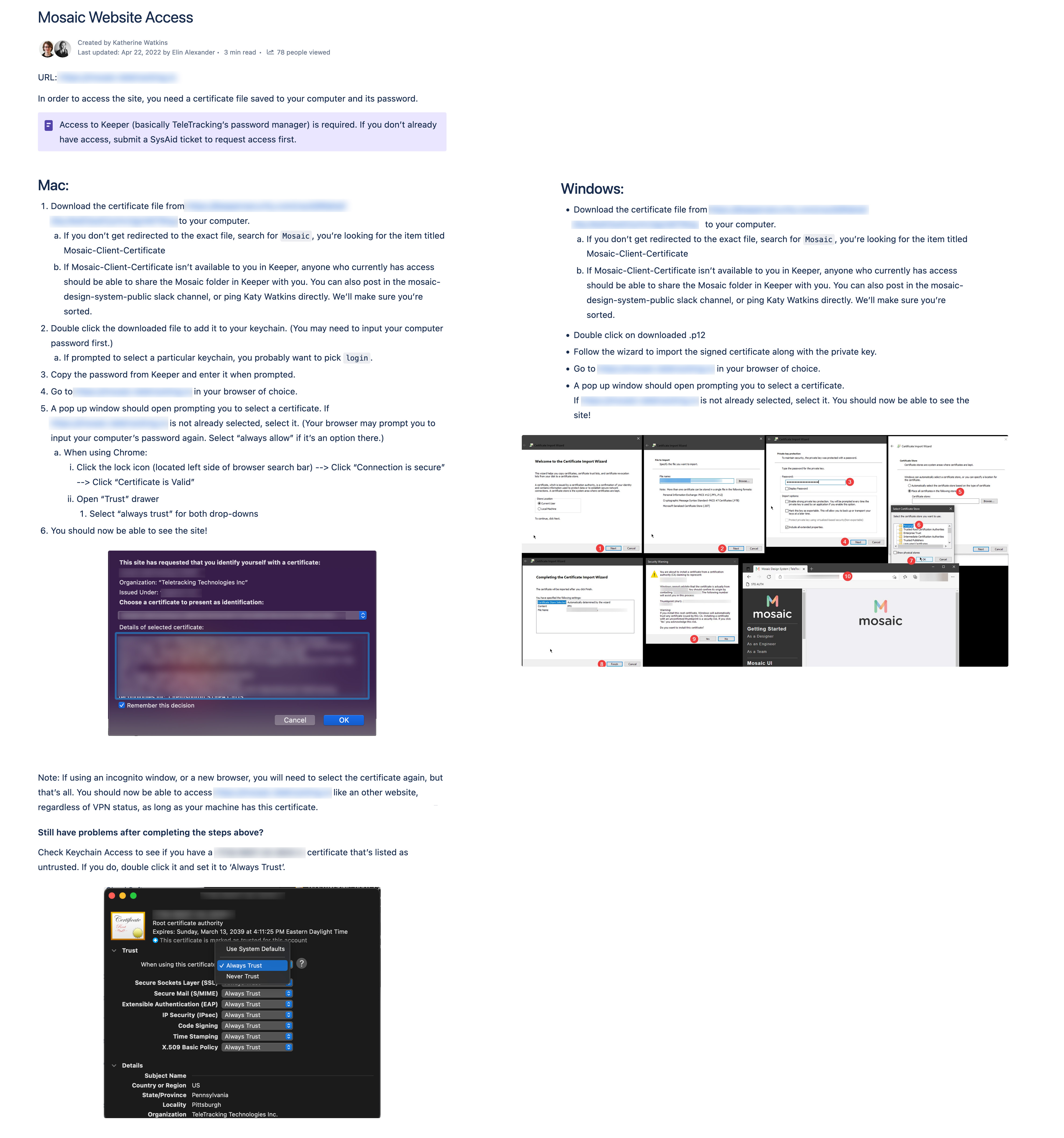

An unexpected result of the new deployment pipeline was a change in authentication. Our previous site required a VPN connection and displayed a generic error about missing assets if you weren't on it. It turned out that VPN authentication for the new cluster would not be immediately feasible; instead, our users would need to install a certificate on their machines. This would be better in the long run, since the VPN requirement often led people to ask me whether the website was down, and a certificate is something you set once and forget. Still, I was concerned about the overhead for designers, and really anyone who wasn't a developer.

So I took a brief detour at this point to write up and test out instructions to ensure anyone in the company could access the site once we transitioned to the new version with CI/CD.

Once deployment of the website was automated and initial access instructions were documented, I could get to work on the problem I was most excited about: updating the website every time our components or core styles package was updated.

Thankfully, Bitbucket has a pipe for triggering a pipeline in a specific repo, so this turned out to be easier than I expected. I updated the build process of all of our design system repos to trigger a new website build after changes were merged and pushed up to our artifact repository. Each of those repos passed along three pieces of data to the website build:

- `$COMPONENT` (boolean)

- `$REPO_NAME` (string)

- `$VERSION` (string)
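
Variables passed to a triggered Bitbucket pipeline show up in the build as environment variables, and environment variables are always strings, so the `$COMPONENT` flag has to be parsed rather than used as-is. Below is a minimal sketch of reading the three values on the website side, written in Python for illustration (the actual pipeline used Bash). `BuildTrigger` and `read_trigger` are hypothetical names, and treating `COMPONENT=false` as "core styles triggered this build" is an assumption:

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class BuildTrigger:
    """The three values a design system repo passes to the website build."""
    component: bool  # assumed: True for a component package, False for core styles
    repo_name: str
    version: str


def _parse_bool(raw: str) -> bool:
    # Environment variables are always strings, so "true"/"false" must be parsed.
    value = raw.strip().lower()
    if value in ("true", "1", "yes"):
        return True
    if value in ("false", "0", "no"):
        return False
    raise ValueError(f"COMPONENT must be a boolean string, got {raw!r}")


def read_trigger(env: Mapping[str, str] = os.environ) -> BuildTrigger:
    # Fail fast with a clear message if the triggering repo forgot a variable.
    missing = [k for k in ("COMPONENT", "REPO_NAME", "VERSION") if not env.get(k)]
    if missing:
        raise KeyError(f"missing pipeline variables: {', '.join(missing)}")
    return BuildTrigger(
        component=_parse_bool(env["COMPONENT"]),
        repo_name=env["REPO_NAME"],
        version=env["VERSION"],
    )
```

Passing a plain dict, as in `read_trigger({"COMPONENT": "false", "REPO_NAME": "core-styles", "VERSION": "3.1.0"})` (hypothetical repo name), makes the parsing easy to test outside the pipeline.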

Using this data, I wrote a Bash script for the website pipeline. If our core styles were updated, it would bump the version in both the component library and the documentation section. If it was just a component update, it would check whether that component was a dependency of the component library. (Our component library cloned each component package during its build, so updates to the displayed components were already handled.) Then it would generate a changelog message and version file and commit the changes to master. That commit triggered the standard pipeline for the website, so a new image was built, pushed to our artifact repository, and deployed.
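
The branching in that script can be sketched as a small pure function. This is a restatement in Python for readability, not the original Bash, and several details are hypothetical: it assumes `$COMPONENT` is false for a core styles release, that "bumping" means writing the new version into a dependency map for each section of the site, and that a component the library lists as a dependency gets the same bump. The names `plan_update` and `commit_commands`, the manifest names, and the changelog and version-file formats are all illustrative:

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Hypothetical names for the two sections of the website.
LIBRARY = "component-library"
DOCS = "docs"


@dataclass
class UpdatePlan:
    # Manifest name -> {dependency name: new version} to write before committing.
    bumps: Dict[str, Dict[str, str]] = field(default_factory=dict)
    changelog_message: str = ""
    version_file: str = ""


def plan_update(component: bool, repo_name: str, version: str,
                library_deps: Dict[str, str]) -> UpdatePlan:
    """Decide what the website build changes for one upstream release.

    `library_deps` is the component library's dependency map
    (package name -> version), e.g. parsed from its package.json.
    """
    plan = UpdatePlan()
    if not component:
        # Core styles release: bump the version in both sections of the site.
        plan.bumps[LIBRARY] = {repo_name: version}
        plan.bumps[DOCS] = {repo_name: version}
    elif repo_name in library_deps:
        # Assumed: a component the library lists as a dependency gets bumped too.
        plan.bumps[LIBRARY] = {repo_name: version}
    # Otherwise nothing is pinned; the rebuild re-clones the component anyway.
    plan.changelog_message = f"Update {repo_name} to {version}"
    plan.version_file = f"{repo_name}@{version}\n"  # hypothetical format
    return plan


def commit_commands(plan: UpdatePlan) -> List[List[str]]:
    # Pushing to master is what triggers the standard website pipeline
    # (build the image, push it to the artifact repository, deploy).
    return [
        ["git", "add", "-A"],
        ["git", "commit", "-m", plan.changelog_message],
        ["git", "push", "origin", "master"],
    ]
```

Keeping the decision in a pure function makes the branching testable without touching git; a pipeline step would then write the files and run each command with `subprocess.run(cmd, check=True)`.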

Results

This was a tremendous relief for me, and a huge improvement for the long-term viability of our design system. On average, this setup saved me approximately 15 hours every six months. More importantly, there was no longer any question about whether the website was up to date. It also freed me up to focus on another systemic problem with the design system: versioning.